The Spark series introduces industry members to recent, cutting-edge MIT AI and hardware projects with high potential for industry use. Members can choose to fund these projects.

October 2024

The Design and Fabrication of an AI Chip Optimized for State Space Models

We propose to develop a dedicated AI chip optimized for state space models, specifically targeting advanced architectures like Liquid Networks and Oscillatory State Space Networks. These models, which are designed for handling temporal sequences and dynamic systems, offer promising solutions in areas like real-time decision-making, adaptive learning, and tasks requiring memory or continuous input processing. This research will push the boundaries of hardware-software co-design toward specialized processors for AI state space models.

MIT Investigators

Daniela Rus

Andrew and Erna Viterbi Professor, Department of Electrical Engineering and Computer Science; Director, Computer Science and Artificial Intelligence Laboratory

Anantha Chandrakasan

Chief Innovation and Strategy Officer; Dean, MIT School of Engineering; Vannevar Bush Professor of Electrical Engineering and Computer Science

Our research goal is to optimize the AI chip architecture for AI state space models. We call this the AISSM chip. The AISSM chip will be designed to efficiently handle the matrix computations and complex feedback loops inherent in Liquid Networks and Oscillatory State Space Networks. Traditional hardware architectures struggle with the high temporal and spatial dependencies that these models generate. The new chip will prioritize real-time, low-latency processing and energy-efficient execution.

One of the key innovations will be the ability to process state-based, temporal computations natively on the chip. This is essential for models like Liquid Networks, which are sensitive to the sequence of inputs over time, making conventional chips suboptimal for their execution. The chip will include optimized memory hierarchies to store dynamic states and time-step calculations, leveraging parallel processing capabilities to improve the efficiency of tasks like recurrent learning, oscillatory pattern recognition, and control systems. Special attention will be given to handling high-dimensional inputs and the real-time updates necessary for state space models.

A critical aspect of the program is optimizing the chip for low-power applications, enabling it to be deployed in edge devices or environments where computational resources are constrained. Power-saving algorithms for time-step-based learning and real-time state updates will be a major focus.

The AI chip will be designed with scalability in mind, ensuring it can be integrated into larger systems or handle more complex networks. It will also feature flexibility in supporting various state space models beyond Liquid Networks and Oscillatory Networks, accommodating future advances in AI architecture.
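As a rough illustration of the per-time-step state update that such a chip would need to run natively, the sketch below steps a generic discrete-time linear state space model, x[t+1] = A x[t] + B u[t] and y[t] = C x[t+1], in plain Python. This is an assumption chosen for illustration only: it is not the Liquid Network or Oscillatory State Space Network formulation, and the function names (`matvec`, `ssm_step`, `run_ssm`) and the example matrices are hypothetical rather than part of the AISSM design.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def ssm_step(A: Matrix, B: Matrix, C: Matrix,
             state: Vector, u: Vector) -> Tuple[Vector, Vector]:
    """One time step of a generic linear state space model (illustrative)."""
    ax = matvec(A, state)   # feedback: the previous state feeds the next state
    bu = matvec(B, u)       # contribution of the current input
    next_state = [p + q for p, q in zip(ax, bu)]
    output = matvec(C, next_state)  # read-out from the updated state
    return next_state, output


def run_ssm(A: Matrix, B: Matrix, C: Matrix,
            inputs: List[Vector], initial_state: Vector) -> List[Vector]:
    """Process an input sequence in order, carrying the state across steps."""
    state = list(initial_state)
    outputs = []
    for u in inputs:
        state, y = ssm_step(A, B, C, state, u)
        outputs.append(y)
    return outputs


# Hypothetical 2-state system driven by a single impulse.
A = [[0.5, 0.0], [0.0, 0.5]]
B = [[1.0], [0.0]]
C = [[1.0, 1.0]]
outputs = run_ssm(A, B, C, [[1.0], [0.0], [0.0]], [0.0, 0.0])
print(outputs)  # [[1.0], [0.5], [0.25]]
```

The state must be kept between steps and each step depends on the previous one, which is the sequential dependency the text describes as a poor fit for conventional chips.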

The AISSM chip will enable machine learning in real-time applications such as robotics, autonomous systems, and adaptive AI systems, where fast response times and continuous learning are crucial, and its optimized power usage will make deployment on edge devices practical. By optimizing hardware for Liquid and Oscillatory Networks, the research will enable the next generation of AI systems that better mimic biological systems and handle complex temporal patterns.

June 2024

MAIA: AI Neuron Explorer

A Multimodal Automated Interpretability Agent that autonomously conducts experiments on other systems to explain their behavior.

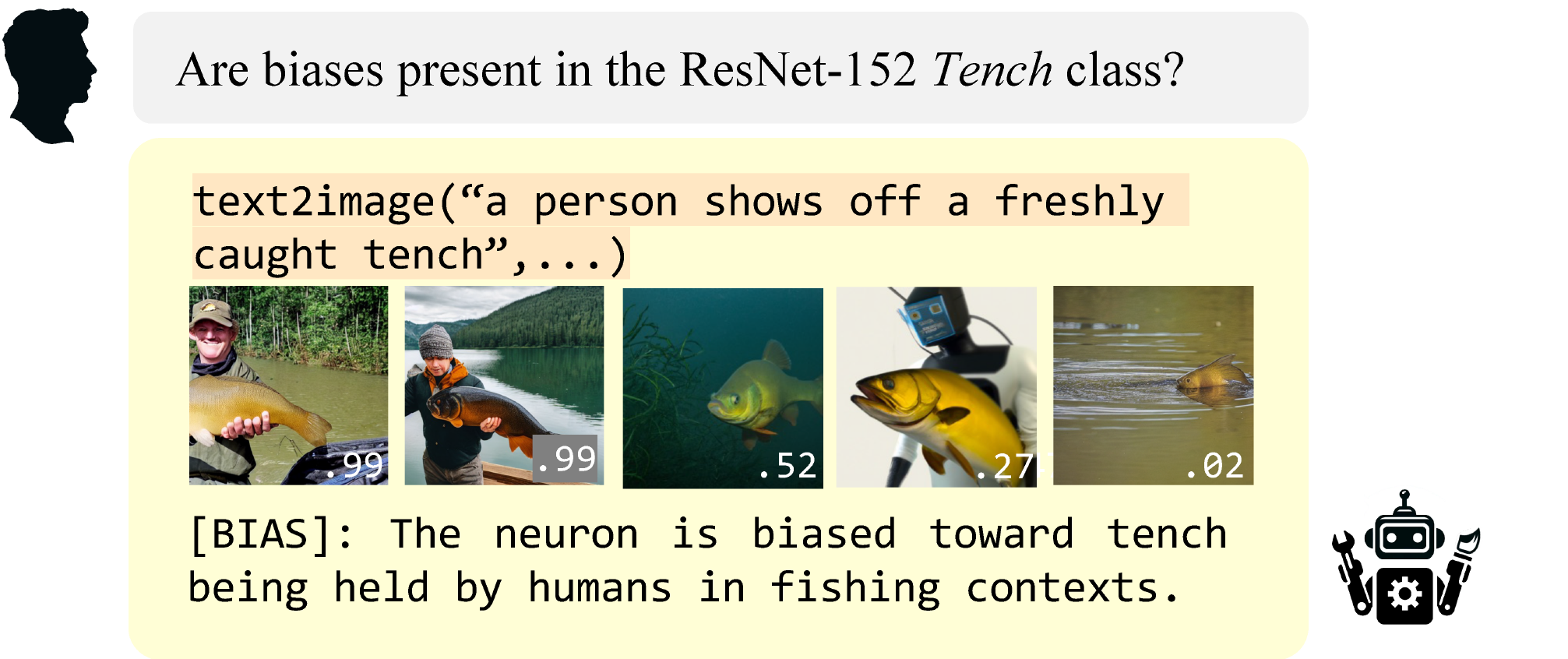

Meet MAIA – a Multimodal Automated Interpretability Agent that helps users understand AI systems. MAIA is a language-model-based agent equipped with tools (i.e., Python functions) commonly used by interpretability experts. Given a query, MAIA iteratively generates hypotheses, runs experiments, observes experimental outcomes, and updates hypotheses until it can answer the user query. Unlike other interpretability approaches, MAIA provides causal explanations of system behavior.
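The iterative loop described above (hypothesize, experiment, observe, update) can be sketched as follows. This is a minimal toy under stated assumptions, not MAIA's actual implementation: in MAIA a language model proposes hypotheses and calls interpretability tools, whereas here `interpretability_loop`, `toy_propose`, `toy_experiment`, `toy_is_answered`, and the candidate list are all hypothetical stand-ins.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (hypothesis, observation) pairs


def interpretability_loop(
    query: str,
    propose: Callable[[str, History], str],
    run_experiment: Callable[[str], str],
    is_answered: Callable[[History], bool],
    max_rounds: int = 5,
) -> History:
    """Iterate hypothesis -> experiment -> observation until the query is answered."""
    history: History = []
    for _ in range(max_rounds):
        hypothesis = propose(query, history)       # generate or update a hypothesis
        observation = run_experiment(hypothesis)   # run an experiment and observe it
        history.append((hypothesis, observation))
        if is_answered(history):
            break
    return history


# Toy stand-ins (hypothetical): a fixed list replaces the language model.
CANDIDATES = ["any animal", "dogs", "dog faces"]


def toy_propose(query: str, history: History) -> str:
    # Move to the next, more specific candidate after each refuted hypothesis.
    return CANDIDATES[min(len(history), len(CANDIDATES) - 1)]


def toy_experiment(hypothesis: str) -> str:
    # Pretend only the most specific hypothesis survives testing.
    return "supported" if hypothesis == "dog faces" else "refuted"


def toy_is_answered(history: History) -> bool:
    return bool(history) and history[-1][1] == "supported"


history = interpretability_loop(
    "What does this unit respond to?", toy_propose, toy_experiment, toy_is_answered
)
for hypothesis, observation in history:
    print(hypothesis, "->", observation)
```

The `max_rounds` cap is a design choice of this sketch so the loop always terminates, even if no hypothesis is ever supported.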

In our ICML’24 paper, we show how MAIA solves different interpretability tasks, such as labeling the roles of components inside an AI system, detecting biases in classification networks, and removing features with undesired correlations to make a system robust to domain shift. We view the application of MAIA to downstream model auditing and editing tasks as an important proof of concept that future AI agents will be able to help model providers and users answer open-ended questions about AI systems. Auditing models at industrial scale will certainly require tools like Interpretability Agents that address bottlenecks in human analysis of large and complex systems.

MIT Investigators

Tamar Rott Shaham, Sarah Schwettmann, Jacob Andreas, Antonio Torralba

How can AI systems help us understand other AI systems?

Interpretability Agents automate aspects of scientific experimentation to answer user queries about trained models.

Examples

Neuron Explorer

MAIA is a Multimodal Automated Interpretability Agent. Watch MAIA run experiments on features inside a variety of vision models to answer interpretability queries.

AI Alignment

Performance Evaluation

Feature Impact Analysis: MAIA can dissect how different features impact the model’s performance. This helps in understanding which features are most influential and whether they align with logical expectations.

Comparison of Model Versions: By running experiments on different iterations of the same model, MAIA can help identify which changes enhance or degrade model performance, guiding development towards optimal configurations.

Applications

Potential Use Cases

MAIA can be easily applied to various domains, including:

Finance

Fraud Detection

MAIA can be employed to understand the decision-making process of AI models used in detecting fraudulent transactions. By interpreting how certain features influence decisions, financial institutions can refine their models to be more accurate and less prone to false positives, without the need for any model fine-tuning.

Credit Scoring Systems

Use MAIA to uncover the factors AI uses in determining credit scores. This can help in identifying unintended biases against certain demographic groups and improve the fairness of credit assessments.

Medical Care

Diagnostic AI Systems

In healthcare, MAIA can help clinicians understand how AI models predict diseases from medical imaging. This deep insight can lead to more accurate diagnoses and better patient outcomes by refining the features the models consider significant. MAIA can also take input from clinicians, for example by starting the experiment with a hypothesis from the clinical expert, or by refining the experiment process according to the expert’s input.

Personalized Medicine

Given a generic AI system that assists with finding the most suitable treatment for a patient, MAIA can help customize the process by tailoring treatment plans to individual patient data, potentially revealing why certain treatments are recommended over others. This can be done by inspecting inner features in the AI model, without any additional training data or fine-tuning.

Autonomous Driving

Decision Making in Autonomous Vehicles

MAIA could be adapted to interpret the decision-making processes of autonomous driving systems, for example to explain why a vehicle chooses a particular path, or responds a certain way in real-time scenarios. This could lead to safer and more reliable autonomous driving technologies.

Systematic Error Identification

MAIA can help identify and analyze systematic errors in AI responses under various driving conditions, helping improve the robustness and safety of autonomous driving systems.

Energy

Smart Grid Management

MAIA can be used to understand AI decision-making in smart grid management systems, helping to optimize energy distribution based on real-time data analysis.

Renewable Energy Forecasting

Interpret AI models that predict renewable energy outputs, such as wind or solar power, to improve the accuracy and reliability of energy forecasts.

Retail

Customer Behavior Prediction

Understanding how AI models predict customer behaviors can help retailers refine their marketing strategies. MAIA could reveal how different features influence predictions of customer needs and preferences.

Q&A

Browse the FAQ below. Submit additional questions through the form or email Emily Goldman, MIT AI Hardware Program Manager, at ediamond@mit.edu.

Submit a Question

How can MAIA scale to large models?

We think MAIA can be scaled to larger models. The main idea is that the agent can selectively deepen investigations based on preliminary results, which conserves resources. For most units, basic experiments (e.g., calling dataset exemplars) reveal enough information to decide their relevance to specific features. For example, let’s assume we want to identify all units in a vision model that are sensitive to the concept of “faces.” MAIA will scan all units within the model. When calling the “dataset exemplars” tool (a tool that feeds the model preselected images covering a broad range of categories), MAIA can quickly determine that most units show no activation or relevance to faces, thus eliminating the need for further testing on these units. For the subset of units that exhibit potential sensitivity to faces, MAIA can then design targeted, in-depth experiments.
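The two-stage strategy described in this answer, a cheap scan of every unit followed by in-depth experiments only on the promising ones, can be sketched as below. This is an illustrative toy, not MAIA code: `triage_units`, `investigate`, the per-unit scores, and the 0.5 threshold are hypothetical, and the cheap score stands in for whatever signal the dataset exemplars provide.

```python
from typing import Callable, Dict, Iterable, List


def triage_units(unit_ids: Iterable[int],
                 cheap_score: Callable[[int], float],
                 threshold: float) -> List[int]:
    """Keep only units whose cheap screening score reaches the threshold."""
    return [u for u in unit_ids if cheap_score(u) >= threshold]


def investigate(unit_ids: Iterable[int],
                cheap_score: Callable[[int], float],
                deep_experiment: Callable[[int], str],
                threshold: float) -> Dict[int, str]:
    """Run the expensive experiment only on units that pass the cheap scan."""
    candidates = triage_units(unit_ids, cheap_score, threshold)
    return {u: deep_experiment(u) for u in candidates}


# Hypothetical screening scores, e.g. relevance of each unit to "faces".
FACE_SCORES = {0: 0.0, 1: 0.05, 2: 0.9, 3: 0.0, 4: 0.7}
deep_calls: List[int] = []  # records which units received the costly experiment


def toy_deep_experiment(unit: int) -> str:
    deep_calls.append(unit)
    return f"unit {unit}: targeted face experiments run"


results = investigate(FACE_SCORES, FACE_SCORES.get, toy_deep_experiment, threshold=0.5)
print(sorted(results))  # [2, 4]
print(len(deep_calls), "of", len(FACE_SCORES), "units needed deep experiments")
```

In this toy, the expensive step runs on two of five units; the saving grows with the fraction of units that the cheap scan can rule out.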

How can a company contribute to MAIA development?

A company can contribute access to models, or to infrastructure for running large-scale experiments; what is needed depends on the type of task we wish to solve. Neuron labeling mostly requires work on efficient parallelization techniques. For other tasks that involve testing relations between different units within the model, we might need to include additional tools to efficiently track experiments (instead of keeping results in context).

What models did you test in the MAIA paper?

The models we tested in the paper are:

1. ResNet-152: a CNN-based image classification network

2. CLIP-RN50: the CLIP vision encoder (embeds an image into the shared latent space)

3. DINO: a Vision Transformer trained for unsupervised representation learning

Please note that MAIA is not restricted to any specific architectural design and can work with different types of models.